***A couple of weeks ago, I posted about AI and what I think it means for creators and creativity. I said some very positive things about it, and I said some very negative things about it, too. I outlined how I use it (never for personal bits, often for work). I also said there were a lot of separate issues with it that I didn’t want to get TOO into at the time, because I wanted to focus on the question at hand.

Today, we’re going for it. But first, let me just say I talk about women a lot, obviously, and every time I do so, I’m referring to ALL women. We’ll be having no exclusionary vibes here, thank you very much.

As always, thank you for reading, and be prepared to buckle in for this one (again) x ***

Hi. I’m Ellen.

I have a tech content writing background, so I understand that AI is a big deal. I really do. Life-changing applications, new levels of accessibility, instant translation, never-before-seen use cases, blah, blah, blah.

It’s all very revolutionary.

But I don’t think I understood exactly how revolutionary it could be for me until last week, when ChatGPT gave me a decent dinner recipe using the contents of a fridge that contained only a single courgette, two droopy spring onions, half a tub of Philadelphia cheese, six cans of Diet Coke, and a bottle of wine.

It was magical. I was blown away. I wanted to host a press conference about it. I messaged a group chat and said generative AI becoming mainstream was the best thing to ever happen to me.

In fact, every negative thought I’d ever had about AI disappeared.

Only to come rushing back a day later, when I heard my neighbour call Alexa a “stupid bitch” through my living room wall.

The problem with gendered AI

At first, I laughed.

I hadn’t made out exactly what Alexa was doing wrong at the time, or what she should have been doing instead, but I could relate. We’ve all been pushed to the very limits of acceptable language by technology. In fact, I have an accent, and Siri doesn’t understand, like, 80% of what I say, so I’ve probably been pushed there more than most.

But at the same time, a question was bubbling up inside me and making me feel like an irritating, overly literal killjoy. Would he still have said “stupid bitch” if Alexa sounded like a man?

I don’t think so.

Now don’t get me wrong, calling Alexa a bitch does not make this guy the devil. I don’t know anything about him beyond the fact he plays a lot of heavy metal (at arguably antisocial hours). I can’t say for sure, but I’m going to optimistically assume he’s probably not a misogynistic swine; he’s probably just a guy who gets really, really frustrated when Alexa won’t load up his favourite screamo album.

But there’s a conversation here. The choice of words, however subconscious, is interesting, because it’s a very female-coded insult.

And while this specific anecdote might not be a huge concern in the grand scheme of things, it serves as a thread that, when pulled, unravels a whole load of potential problems for the gals.

The idea of the woman as the secretary

The first issue is simple. A lot of AI assistants are given female personas. It’s easy not to question it, but take a moment to think about how weird it is that we’ve chosen to gender software at all??

What’s more, the likes of Alexa and Cortana are tools designed to take your notes, write your shopping list, schedule your day, etc. They’re basically acting as your personal assistant.

The fact these tools also give the impression of “female” perpetuates an irritating stereotype that women are the more subservient gender. The note takers. The coffee makers. The secretaries. The pretty little things there to look good and run around after the men.

And this stereotype isn’t just hurting our feelings, it’s hurting our job prospects, too. Research shows women in male-dominated careers like engineering are still burdened with more administrative work and clerical tasks than their male counterparts are. To put it simply, we haven’t quite shaken off the idea that we’re here to take notes and make coffee, and Alexa isn’t helping.

The (scarier) idea of the woman as the submissive minion

If that wasn’t depressing enough, “secretary” isn’t the only harmful role female-voiced AI assistants end up taking on. This piece from a few years ago, covering a UNESCO report, talks about the frequency of sexually aggressive or hostile comments towards female-sounding AI tools, and what that means for human women:

“Because the speech of most voice assistants is female, it sends a signal that women are obliging, docile and eager-to-please helpers, available at the touch of a button or with a blunt voice command like ‘hey’ or ‘OK’,” the report said.

“The assistant holds no power of agency beyond what the commander asks of it. It honours commands and responds to queries regardless of their tone or hostility. In many communities, this reinforces commonly held gender biases that women are subservient and tolerant of poor treatment.”

“It cited research by a firm that develops digital assistants that suggested at least 5% of interactions were ‘unambiguously sexually explicit’ and noted the company’s belief that the actual number was likely to be ‘much higher due to difficulties detecting sexually suggestive speech’.”

So basically, while you’ve got one guy shouting “stupid bitch” at Alexa, you’ve probably got another a few streets over calling Cortana a slut and describing what he wants to do to her in graphic, violent detail.

And yes, it’s technology. Yes, it’s technically a victimless interaction. But don’t tell me it doesn’t make you feel a little bit uneasy.

Because, unfortunately, it doesn’t bode well for women in the real world. The people who do this are doing it because they view this vaguely female, submissive, secretary-type thing as something they can abuse. So, what happens when they get bored and want a bigger challenge, a more realistic experience, or something with an element of physicality?

It’s grim. It’s very grim. But, in the interest of balance, it’s not all bad news.

First and foremost, I’m sure there are plenty of men who would never send sexually violent threats to Cortana (or any actual human either). That’s good. On top of that, we’ve started to see more genderless virtual assistants recently. ChatGPT, for example, isn’t necessarily associated with any one gender. Apple also recently changed Siri’s default setting, so the voice isn’t automatically female. That’s also good.

It’s small progress, but it’s progress, nonetheless.

The bigger, more concrete problems

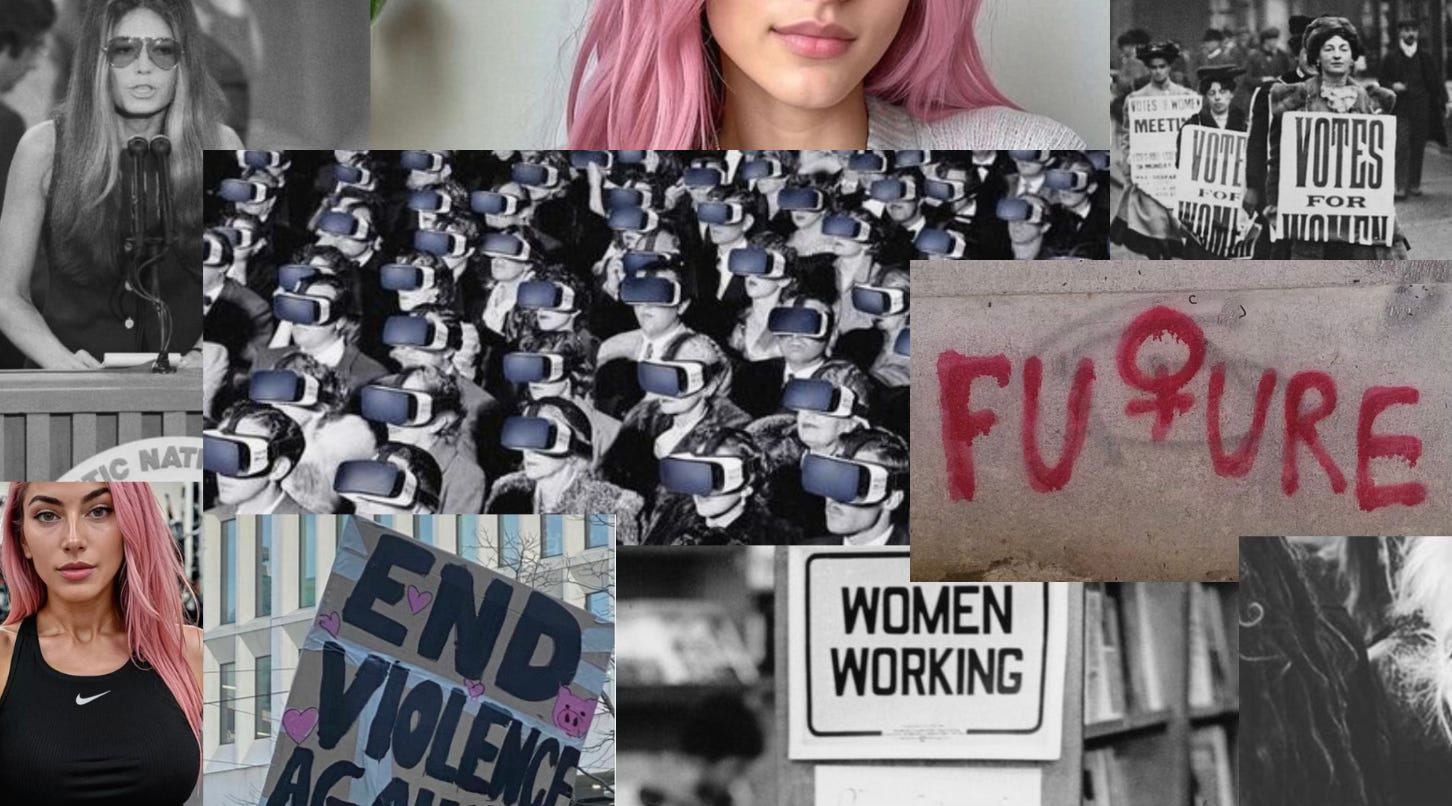

Progress in one arena, however, doesn’t make up for the all-out slaughter happening in another. And my biggest concern when it comes to AI is the fact that everything inherently bad or concerning about it seems to disproportionately affect women.

Let’s talk jobs. The World Economic Forum warned that automation could displace up to 85 million jobs by 2025. That’s not some far-off future threat; that’s next year. This is a problem for all of us, but it’s women who are particularly vulnerable. The McKinsey Global Institute found women are 1.5 times more likely than men to need to move into new occupations as automation reshapes work. Woo.

Next up: beauty standards. AI has taken the impossibly beautiful Instagrammer to a whole new and terrifying level. You’ve probably seen an AI influencer online, even if you didn’t realise it at the time. And when you do, you’ll see that they’re all impossibly glamorous, pore-less, human-adjacent things. High-tech drawings that are somehow prettier and richer and more sociable than you are!

And they’re specially designed to sell you shapewear and night cream and teeth-whitening strips that they, themselves, will never need because (and I can’t stress this enough) they do not exist. They will never have eye-bags or cellulite or bloating or plaque or body hair. Because they don’t have bodies. (Side note, … wrote a GREAT piece on this specifically and you should read it.)

And then there’s an even more insidious issue: deepfake porn. Thanks to AI, we’ve entered a whole new world of non-consensual, AI-generated revenge porn. It’s terrifying. According to Sensity, a cybersecurity firm, 96% of all deepfake content online is non-consensual pornography, and guess what? It disproportionately targets women.

The final point here is that AI itself is biased because it’s trained on biased data. ChatGPT and other generative tools are trained on vast amounts of public content scraped from the internet, which means they’re also trained on the misogynistic content that exists on the internet (and there’s a lot of it). AI doesn’t, and will never, fix our biases, because it’s too busy beaming them right back at us.

Is there hope for the future?

Maybe.

We know AI is taking women’s jobs, making beauty standards more of a living nightmare than they already were, and perpetuating dodgy stereotypes.

But (and I don’t know if this is just a thinly veiled attempt to make myself feel less awful about still using it at work) I do also think it needs to be acknowledged that a lot of these problems aren’t really the fault of the AI itself; they’re the fault of the humans controlling it.

Blaming generative AI for the online harassment and exploitation of women is like blaming the existence of cars for a drunk driving incident. Yes, cars are powerful, dangerous pieces of machinery. Yes, access to these machines needs to be monitored. But ultimately, the accident is still the fault of the irresponsible drunk behind the wheel.

It’s really, really scary. But the technology itself isn’t what’s scary, as such.

What we’re doing with it is scary. How we’re letting people treat female voice assistants — and then women in real life — is scary. The fact we’re not providing enough support or regulation to protect women (or anyone else, for that matter) against AI-generated revenge porn is scary.

Be scared, but be vocal

As women, we have enough to be scared of. Of the guys walking behind us in dark car parks. Of never making as much money as men. Of taking our eyes off our drinks at the bar. Of doctors and policy makers having more control over our bodies than we do. Of not being able to live up to society’s current beauty standards, but also of looking like we care too much about living up to society’s current beauty standards, of being called liars, of being called bossy, of being called bitches.

We don’t need to add lack of regulated AI to the mix.

And so, while I don’t pretend to have all the answers (or any answers, realistically), I do know AI itself wouldn’t have to be scary if humans just… did better.

And that means holding the tech companies accountable, working for more female and non-binary representation in STEM, regulating the regulators, calling out misogyny when you see it (even if that misogyny is directed at a robot), and always, always, always promoting intersectionality.

I’m a cis white woman. When I say I’m scared, I say it with the understanding that there are women of colour and trans women and other minority groups who have it way, way worse than I do.

So my final comment is, be scared, yes, but be vocal where you can.

…And maybe go read something light and airy now to shake this one off (selfishly, I recommend my latest ode to Sally Rooney, but just like ChatGPT, I am biased).

About The Content Girl:

Opinions, insights, and the occasional marketing musing from a professional Content Writer giving writing in her own voice a go. You can expect:

Commentary on pop-culture/regular culture and the like

Insights/tips/information around professional Content Writing/Marketing and Digital Marketing

Personal essays (I’ll try to make these not insufferable, I swear)

Book reviews, recommendations, and roundups

The odd piece of flash fiction
